Setting up my homelab

A few years ago, I got a nice little year-end bonus from work, and decided to give myself a treat. I bought a cheap Synology NAS device with a pair of fast SSDs. I didn’t have any special purpose in mind for it at the time, but I was juggling multiple laptops, and sharing files between them was a pain. It became especially useful when the iPad came into the picture, as that device is downright poor at sharing files, and I needed to move art and reference photos between it and the PCs.

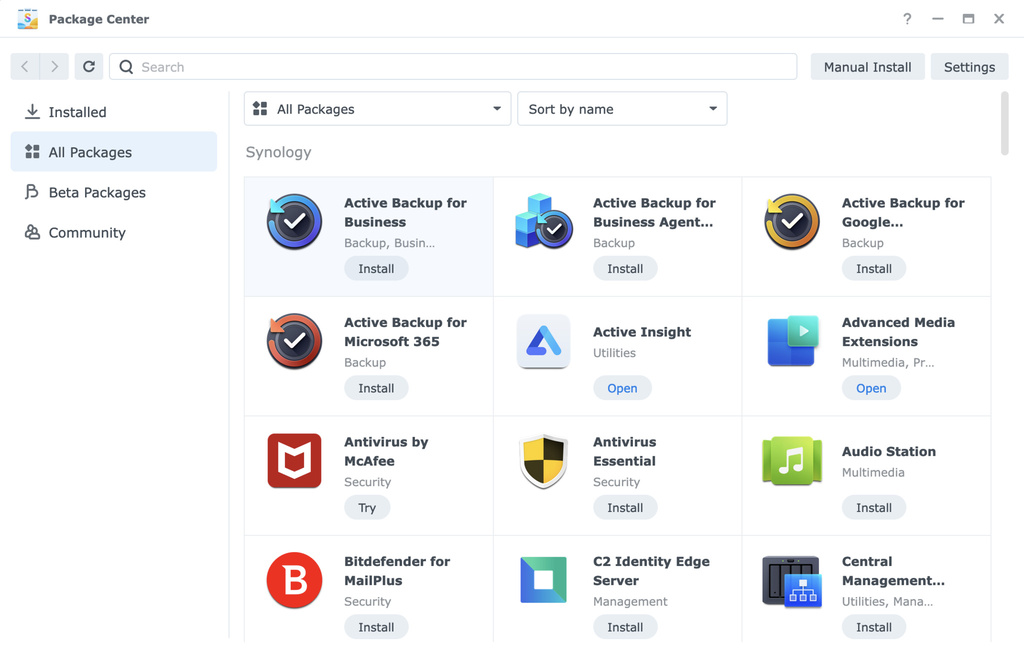

A couple years ago I began to expand the services I was running on the NAS. It’s a little Linux computer, capable of running all kinds of stuff. Synology has a little app store with a number of offerings, such as photo servers, media servers, backup services, and so on. I experimented with several of those and eventually settled into a pleasant groove.

This year I decided I was going to expand the library for my chosen media server. It wasn’t long before I exceeded the storage available on those little SSDs, so I upgraded to a newer NAS and bigger drives. Along the way, I have been paying more attention to the device and considering what additional capabilities I might wring from having a little Linux server on my network.

I have this thing really singing now, and I am quite pleased. Today, I am going to document and share that journey.

Emby

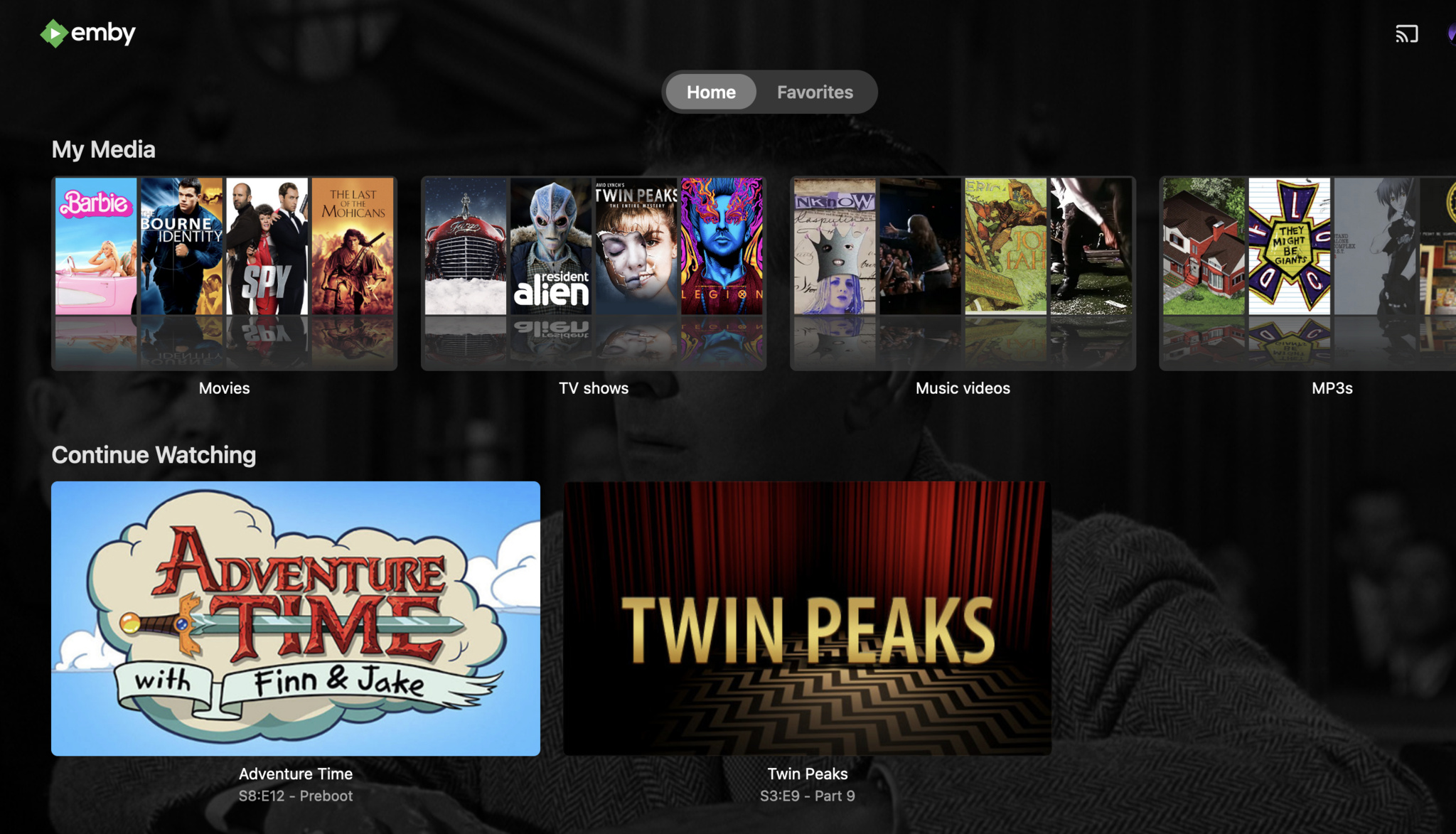

I’ve been running Emby for a long time, but for most of that time it was just a way for my mom to watch rips of her outsized DVD collection. I had only a few odd movies I’d collected over time. I’m not sure what triggered me to build out my media library, but I’ve been bulking it up fast this year. I have hundreds of movies on there now, although that’s still just a small subset of the piles and piles I’ve purchased over the years.

Emby does just about everything I might want in a media server. It’s snappy, attractive, and reliable. It queries metadata about your media so you can cross reference things by actors and directors. It supports subtitling and transcoding.

But, when I wanted to start watching the movies with my girlfriend, it set me off down a long road of figuring out how to do so without exposing myself to the wider world.

Wireguard

At first, my NAS was completely secure, or at least as secure as my router. With no ports forwarded, it was only reachable by devices on the LAN. Synology has a built-in service called QuickConnect that lets you access your NAS from outside networks. I decided I didn’t trust that, so I took advice (and a lot of coaching) from a friend and set up Wireguard. It took me a long time to understand this, but it’s just a private VPN you can host yourself.

I designate certain client devices as trusted, crafting a special configuration file for each. They authenticate with the server using asymmetric key pairs, tunneling through a single UDP port forwarded on the router. The Wireguard service does not answer at all unless a packet is structured and encrypted the way it expects, which makes it a quiet protocol: a port scan with nmap has nothing to discover.
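To give a feel for what one of those client files contains, here’s a minimal Python sketch that renders one in the wg-quick format. Everything in it is a placeholder chosen for illustration: the keys (real ones come from `wg genkey` and `wg pubkey`), the 10.8.0.0/24 tunnel subnet, the 192.168.1.10 DNS address, and the hypothetical `vpn.example.synology.me:51820` endpoint. The `DNS` line is what hands name resolution over to my own server once the tunnel is up.

```python
def render_client_config(
    client_private_key: str,
    client_address: str,
    dns_server: str,
    server_public_key: str,
    endpoint: str,
    allowed_ips: str,
) -> str:
    """Render a wg-quick style client config from caller-supplied values."""
    lines = [
        "[Interface]",
        f"PrivateKey = {client_private_key}",  # never leaves this device
        f"Address = {client_address}",          # this client's address inside the tunnel
        f"DNS = {dns_server}",                  # resolver to use while connected
        "",
        "[Peer]",
        f"PublicKey = {server_public_key}",     # identifies the server
        f"Endpoint = {endpoint}",               # public hostname:UDP port
        f"AllowedIPs = {allowed_ips}",          # destinations routed through the tunnel
        "PersistentKeepalive = 25",             # keeps NAT mappings alive
    ]
    return "\n".join(lines) + "\n"


# Placeholder values only (hypothetical keys, addresses, and hostname).
example = render_client_config(
    client_private_key="<client private key>",
    client_address="10.8.0.2/32",
    dns_server="192.168.1.10",
    server_public_key="<server public key>",
    endpoint="vpn.example.synology.me:51820",
    allowed_ips="10.8.0.0/24, 192.168.0.0/16",
)
print(example)
```

Listing only the tunnel and LAN subnets in `AllowedIPs` keeps ordinary Internet traffic off the VPN; a client that should send everything through the tunnel would use `0.0.0.0/0` instead.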

DDNS and Reverse proxy

My ISP doesn’t give me a static IP address, so I use the dynamic DNS (DDNS) service on the NAS to keep a synology.me hostname pointed at my current address. Anyone on the Internet can use that name to find my router, but only a client with the right Wireguard tunnel configuration can get any further. That name also has a Let’s Encrypt TLS certificate attached, so clients can authenticate the connection over HTTPS.
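The NAS handles DDNS for me, but the core idea is simple enough to sketch. This is not Synology’s implementation: `get_public_ip` and `push_update` are hypothetical hooks standing in for “ask something what my WAN address is” and “tell the DNS provider,” and the addresses are documentation-range examples.

```python
import ipaddress
from typing import Callable, Optional


def ddns_tick(
    last_known: Optional[str],
    get_public_ip: Callable[[], str],
    push_update: Callable[[str], None],
) -> str:
    """One pass of a DDNS client: push an update only when the address changes.

    get_public_ip and push_update are hypothetical hooks supplied by the caller.
    """
    # Normalize and validate; ip_address raises ValueError on garbage.
    current = str(ipaddress.ip_address(get_public_ip().strip()))
    if current != last_known:
        push_update(current)
    return current


# Simulated run using documentation-range addresses.
sent = []
ip = ddns_tick(None, lambda: "203.0.113.7", sent.append)    # first run: update
ip = ddns_tick(ip, lambda: "203.0.113.7", sent.append)      # unchanged: no update
ip = ddns_tick(ip, lambda: "198.51.100.42", sent.append)    # ISP changed it: update
print(sent)  # ['203.0.113.7', '198.51.100.42']
```

A real client would run this on a timer and remember `last_known` between runs, so the DNS provider only hears from it when something actually changed.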

I then run the built-in reverse proxy service to make subdomains within my “global” domain map to the individual services. It forwards the traffic to the right machine and port. The nice thing is it can even send remote HTTPS traffic to a local HTTP port, so the local services don’t need to understand the TLS certificate themselves. And because the certificate has the wildcard setting, all of these subdomains verify for all web browsers.
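Here’s a toy Python model of what the reverse proxy decides for each request, assuming a hypothetical `*.example.synology.me` wildcard certificate and made-up backend addresses (8096 and 3000 are Emby’s and Gitea’s usual default ports; the rest is invented). The wildcard check follows the usual rule that `*` covers exactly one leftmost label.

```python
from typing import Optional

# Hypothetical routes: public subdomain -> local plain-HTTP backend.
ROUTES = {
    "emby.example.synology.me": ("192.168.1.10", 8096),
    "rss.example.synology.me": ("192.168.1.10", 8080),
    "git.example.synology.me": ("192.168.1.10", 3000),
}


def wildcard_matches(pattern: str, hostname: str) -> bool:
    """True if a certificate name like '*.example.synology.me' covers hostname.

    '*' stands for exactly one leftmost label: it covers 'emby.example.synology.me'
    but not 'a.b.example.synology.me' or the bare 'example.synology.me'.
    """
    pattern, hostname = pattern.lower(), hostname.lower()
    if not pattern.startswith("*."):
        return pattern == hostname
    first, dot, rest = hostname.partition(".")
    return bool(first) and bool(dot) and rest == pattern[2:]


def backend_for(hostname: str, cert_name: str) -> Optional[str]:
    """Return the local URL a TLS-terminating proxy would forward to, if any."""
    if not wildcard_matches(cert_name, hostname):
        return None  # a browser would reject the certificate for this name
    target = ROUTES.get(hostname.lower())
    if target is None:
        return None  # no route configured for this subdomain
    host, port = target
    return f"http://{host}:{port}"  # TLS ends at the proxy; the backend speaks plain HTTP


print(backend_for("git.example.synology.me", "*.example.synology.me"))  # http://192.168.1.10:3000
```

The design point is the last line of `backend_for`: only the proxy ever holds the certificate, so each service can stay on plain HTTP inside the LAN.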

DNS

Or rather, they verify as long as the name resolves to an address the client can actually reach. For the greater world, that public IP address is valid, but here on my LAN, it’s the wrong choice. Local clients should be using local IPs. I am running a DNS server on the NAS that maps all those subdomains to local addresses, so if you’re using my DNS, those names all answer with values in the 192.168.0.0/16 range. To the outside world, though, those names don’t mean anything at all: DDNS has a single, top-level name that points to the router, and the subdomains are nonsense.
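This arrangement is usually called split-horizon DNS: the same name gets a different answer depending on which resolver you ask. Here’s a toy Python model of it; the record table, the `resolve` function, and the addresses are hypothetical, and a real DNS server keeps these records in its own settings rather than a dict.

```python
import ipaddress
from typing import Optional

LAN = ipaddress.ip_network("192.168.0.0/16")

# Hypothetical local records served by the NAS's DNS server.
LOCAL_RECORDS = {
    "emby.example.synology.me": "192.168.1.10",
    "git.example.synology.me": "192.168.1.10",
    "rss.example.synology.me": "192.168.1.10",
}


def resolve(name: str, public_view: dict[str, str]) -> Optional[str]:
    """Answer from local records first; otherwise fall back to the public view."""
    key = name.lower().rstrip(".")
    if key in LOCAL_RECORDS:
        return LOCAL_RECORDS[key]
    return public_view.get(key)  # stands in for forwarding to an upstream resolver


def is_lan(address: str) -> bool:
    return ipaddress.ip_address(address) in LAN


# Publicly, only the top-level DDNS name exists (documentation-range address).
PUBLIC_VIEW = {"example.synology.me": "203.0.113.7"}
print(resolve("emby.example.synology.me", PUBLIC_VIEW))  # 192.168.1.10 when asking my DNS
print(PUBLIC_VIEW.get("emby.example.synology.me"))       # None when asking the world
```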

Then I got tricky. The Wireguard client configuration lets you set the DNS server a client uses while connected. So a remote client starts off by looking up the router’s public address, negotiates a Wireguard connection, and from then on my DNS service is responsible for resolving names, answering with local values for everything in my domain. Those IPs are only reachable from the LAN, and running the tunnel effectively puts you on the LAN. It works great! The same URLs work for local and remote clients, but mean nothing to anyone outside my VPN.

Docker

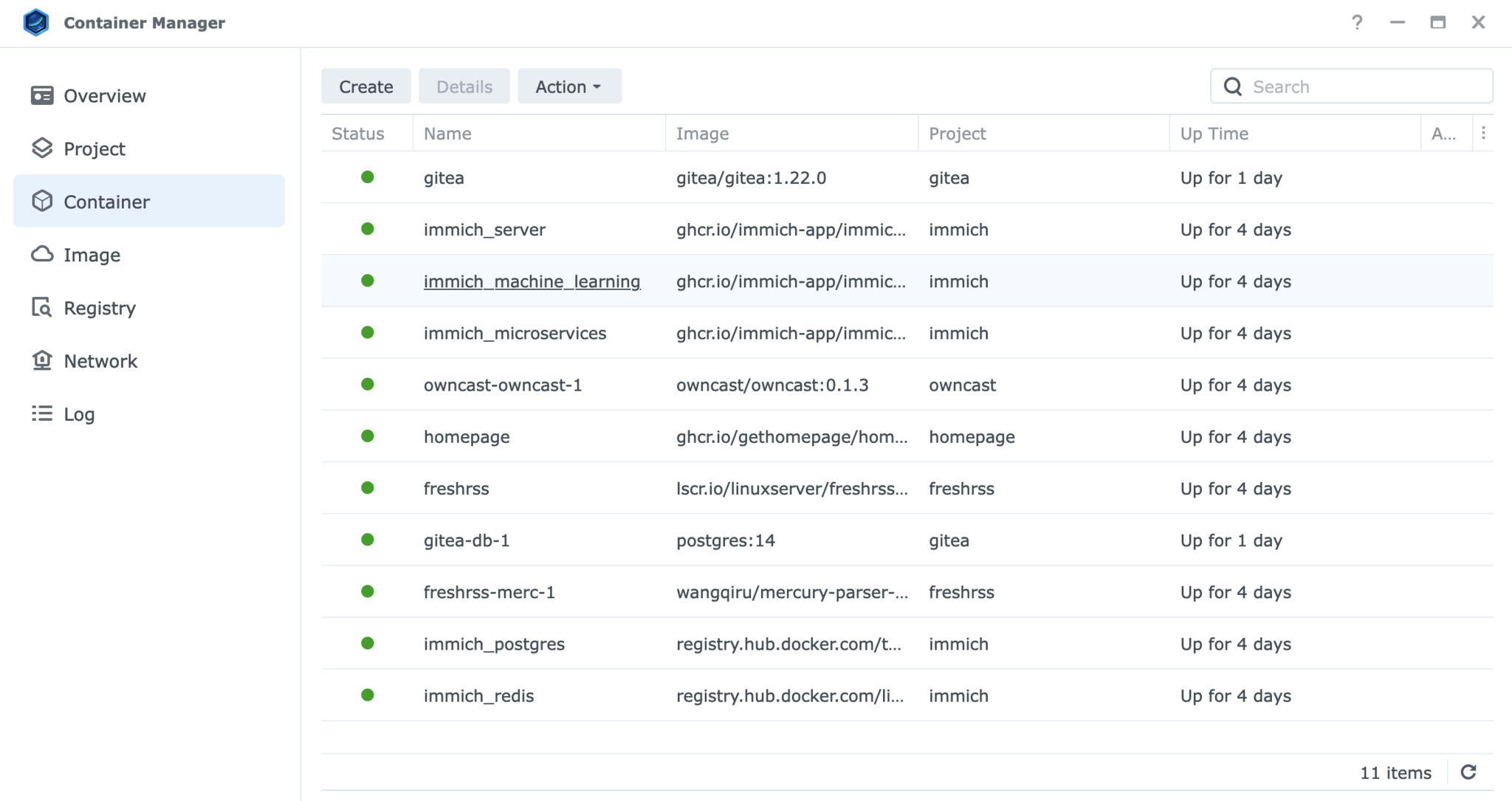

I let things rest there for a long while. It doesn’t take much digging in Synology circles before you find people talking about Docker, a lightweight container platform (think virtualization, minus most of the overhead) with thousands of prebuilt images available. Synology provides a Docker interface called Container Manager, but it has a level of complexity well beyond the friendly app store that is Package Center. After a few false starts, though, I started getting things to work.

Docker Compose configuration files aren’t as easy to get working as pre-built binaries, but I eventually got into the swing of things. Getting a handle on volume mappings (real path on the NAS:path the image expects) and port mappings (real port:port the image expects) was the key. Compose downloads the image (or builds it, if the file asks for that), maps local resources into it, and runs the service.
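The one rule worth internalizing is that the left side of a mapping is always the NAS and the right side is always inside the container. Here’s a small Python sketch that pulls the short-syntax strings apart to make that explicit. The function names and example paths are hypothetical, and it only handles the simple forms (no IP-bound ports, port ranges, or `/udp` suffixes).

```python
def parse_volume(spec: str) -> tuple[str, str, str]:
    """Split 'nas_path:container_path[:mode]' into its parts.

    Handles 'host:container' and 'host:container:ro'; read-write is the default.
    """
    host, container, *rest = spec.split(":")
    mode = rest[0] if rest else "rw"
    return host, container, mode


def parse_port(spec: str) -> tuple[int, int]:
    """Split 'nas_port:container_port'. IP-bound, range, and /udp forms are not handled."""
    host, container = spec.split(":")
    return int(host), int(container)


# Hypothetical mappings for a media container.
print(parse_volume("/volume1/video:/media:ro"))  # ('/volume1/video', '/media', 'ro')
print(parse_port("8096:8096"))                   # (8096, 8096)
```

When a container can’t find its files or answers on the wrong port, checking which side of the colon you edited usually finds the problem.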

FreshRSS

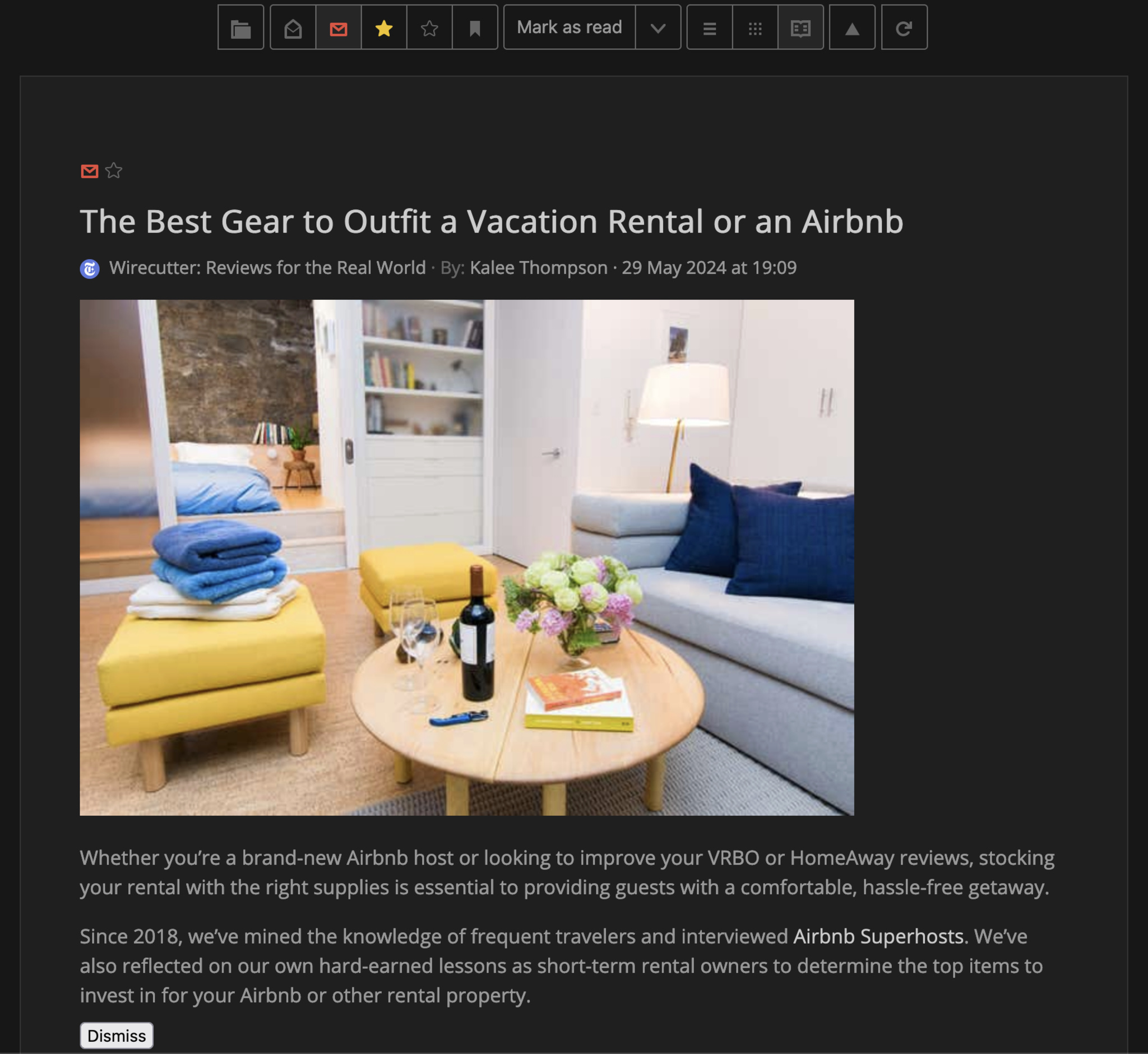

Like many people, I am a refugee from Google’s 2013 killing of Google Reader. Over the intervening years, I have used a number of alternatives, but I’ve never been fully satisfied. One thing you can do with a home server is run your own RSS server. There are a few of them available, but FreshRSS appears to be the popular choice.

It’s quite nice! I was able to import an OPML file of my active feeds, and it worked straight away. It also has plugin support, which I used to tweak the interface, integrate Mercury Reader, and more.
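OPML is just XML with one `<outline>` element per feed, the feed address living in its `xmlUrl` attribute (folders are outlines without one). Here’s a small Python sketch, standard library only, that lists what an export contains before you import it; the function name and sample file are made up.

```python
import xml.etree.ElementTree as ET

SAMPLE = """<opml version="2.0">
  <head><title>My feeds</title></head>
  <body>
    <outline text="Tech">
      <outline type="rss" text="Example Blog" xmlUrl="https://blog.example.com/feed.xml"/>
    </outline>
  </body>
</opml>"""


def feeds_from_opml(opml_text: str) -> list[tuple[str, str]]:
    """Return (title, feed URL) pairs for every outline that carries an xmlUrl."""
    root = ET.fromstring(opml_text)
    feeds = []
    for outline in root.iter("outline"):  # walks nested folders too
        url = outline.get("xmlUrl")
        if url:  # folder outlines have no xmlUrl and are skipped
            title = outline.get("title") or outline.get("text") or url
            feeds.append((title, url))
    return feeds


print(feeds_from_opml(SAMPLE))  # [('Example Blog', 'https://blog.example.com/feed.xml')]
```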

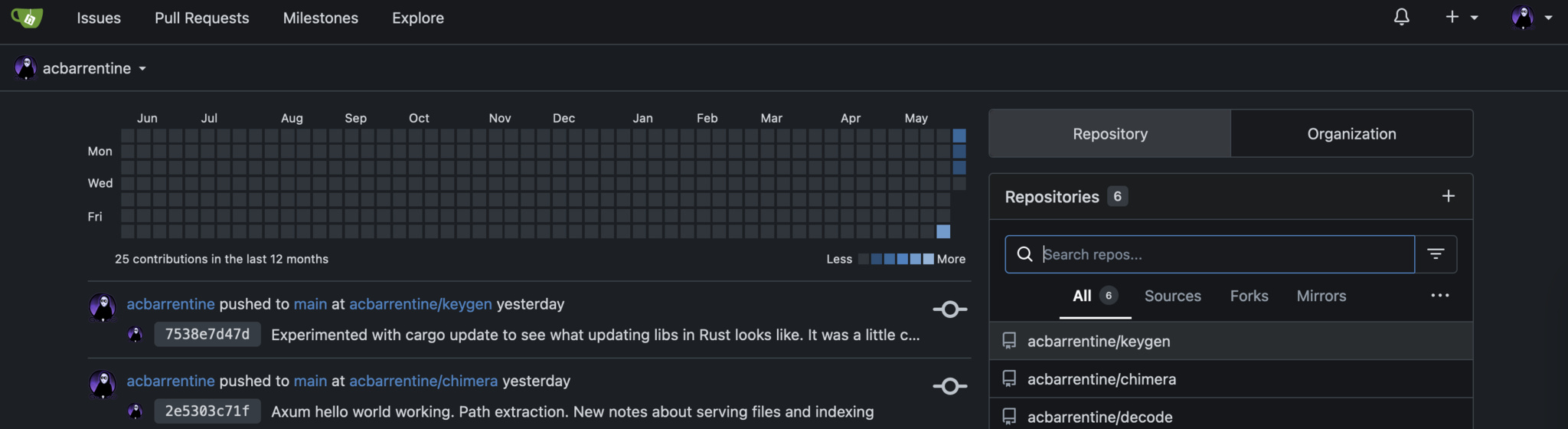

Gitea

Source control, dude! Gitea is a fork of Gogs, a git server written in Go, for those who would rather run their own server than use a public service like GitHub. It’s fast, private, and reliable. And because it’s behind my reverse proxy, clients can even access it over HTTPS.

For anyone concerned with the fact my source code is all local, I should mention I have an offsite backup arrangement as well.

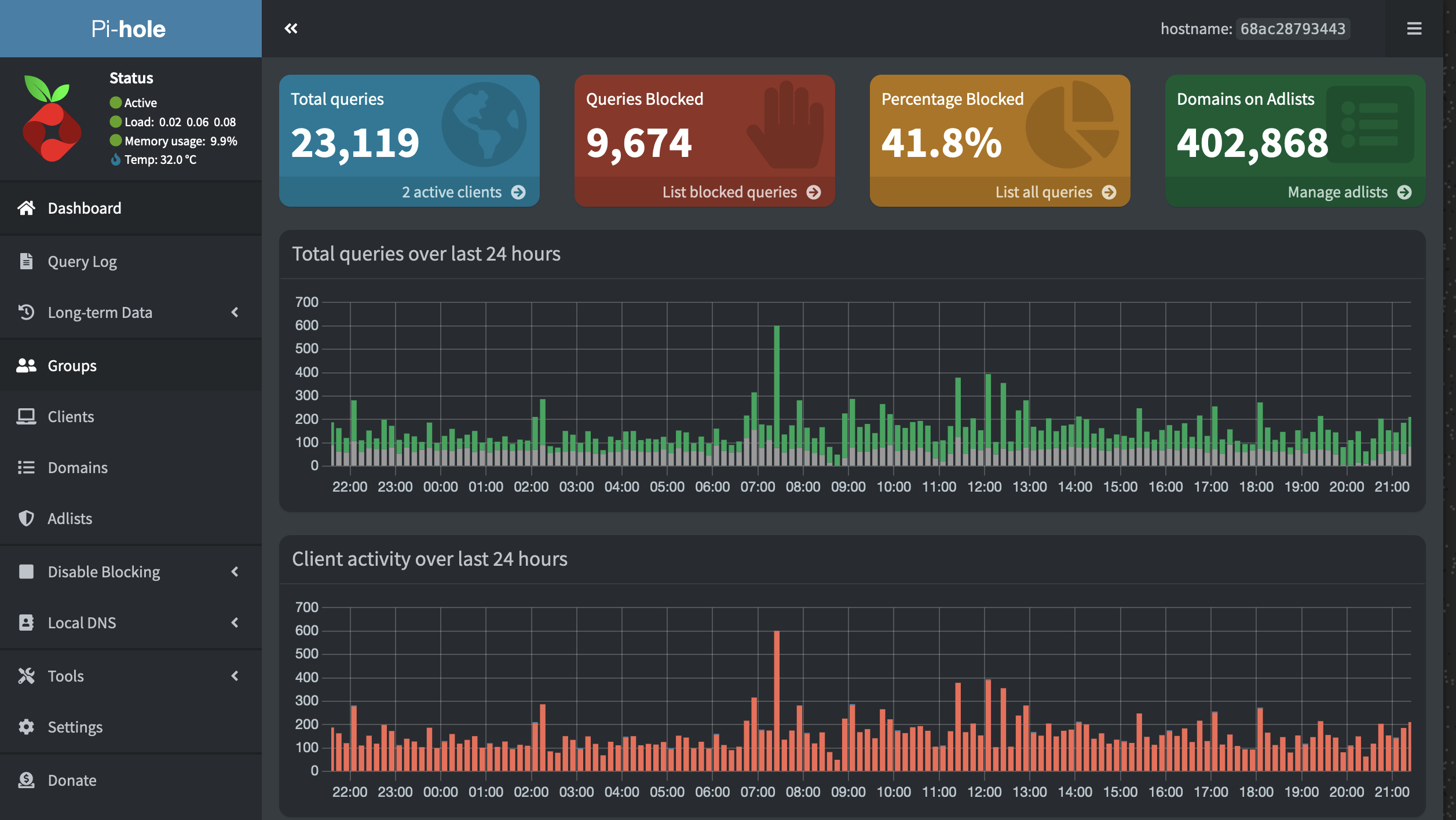

Pihole

The next job was to kill the ads. Pihole was surprisingly low drama to install and get running. It acts as a DNS server, but short circuits requests to ad and tracking servers. Because it is its own DNS server, it has the same ability to map those custom subdomains to the local IPs. I am running this on the old SSD NAS, which now gives me two DNS servers on the network. Everyone recommends this kind of redundant setup so you can reboot a server without breaking all your clients — a problem I ran into with the old arrangement.
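Here’s a toy Python model of both ideas: a resolver that short-circuits blocked names, and a client that falls back to a second DNS server when the first one is down. It is a sketch, not Pi-hole’s code. The blocklist and records are hypothetical, Pi-hole’s real matching rules and blocking modes are more involved (answering `0.0.0.0` is my understanding of its default, treat it as an assumption), and real clients’ failover behavior varies by operating system.

```python
from typing import Callable, Optional

# Hypothetical entries; real blocklists hold many thousands of domains.
BLOCKED_DOMAINS = {"ads.example.net", "tracker.example.org"}
CUSTOM_RECORDS = {"emby.example.synology.me": "192.168.1.10"}

Resolver = Callable[[str], Optional[str]]


def answer(name: str, forward: Resolver) -> Optional[str]:
    """Short-circuit blocked names, serve custom records, forward everything else."""
    key = name.lower().rstrip(".")
    if key in BLOCKED_DOMAINS:
        return "0.0.0.0"  # unroutable: the ad request goes nowhere
    if key in CUSTOM_RECORDS:
        return CUSTOM_RECORDS[key]
    return forward(key)


def query_with_failover(name: str, servers: list[Resolver]) -> Optional[str]:
    """Client-side view: try each configured DNS server in turn."""
    for server in servers:
        try:
            return server(name)
        except ConnectionError:
            continue  # that server is down (say, rebooting); try the next one
    raise ConnectionError("no DNS server reachable")


def rebooting_nas(name: str) -> Optional[str]:
    raise ConnectionError("timed out")  # simulate the main NAS mid-reboot


def pihole(name: str) -> Optional[str]:
    return answer(name, forward=lambda n: None)  # hypothetical: no upstream in this toy


print(query_with_failover("ads.example.net", [rebooting_nas, pihole]))  # 0.0.0.0
```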

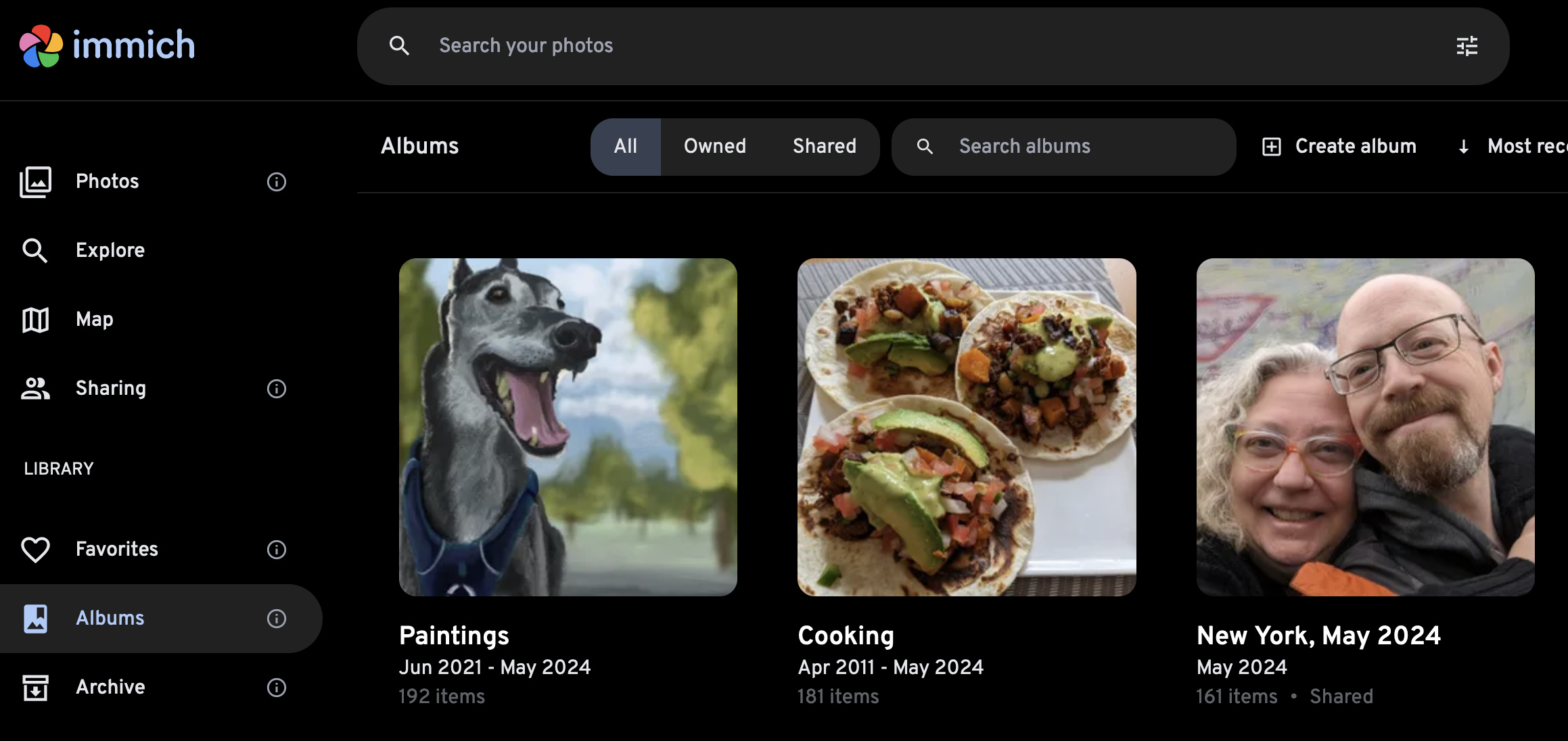

Immich

During my vacation to Rapa Nui last year, Google sent me a notice that I was approaching my account storage limit. Instead of paying for more, I opted to export everything with Google Takeout and host the photos myself. At the time I used Synology Photos to manage them, but I was never satisfied with that piece of software.

Searches for “homelab photo server” or “self-hosted photo server” led me to Immich. At first it sounded too heavy for my purposes, but when the alternatives I was trying failed to work, I gave it a try. And I’m in love! It is slick and sophisticated in a way I don’t expect from open source software. It is attractive and fast. The facial recognition is especially well done; I haven’t spotted a single false positive yet.

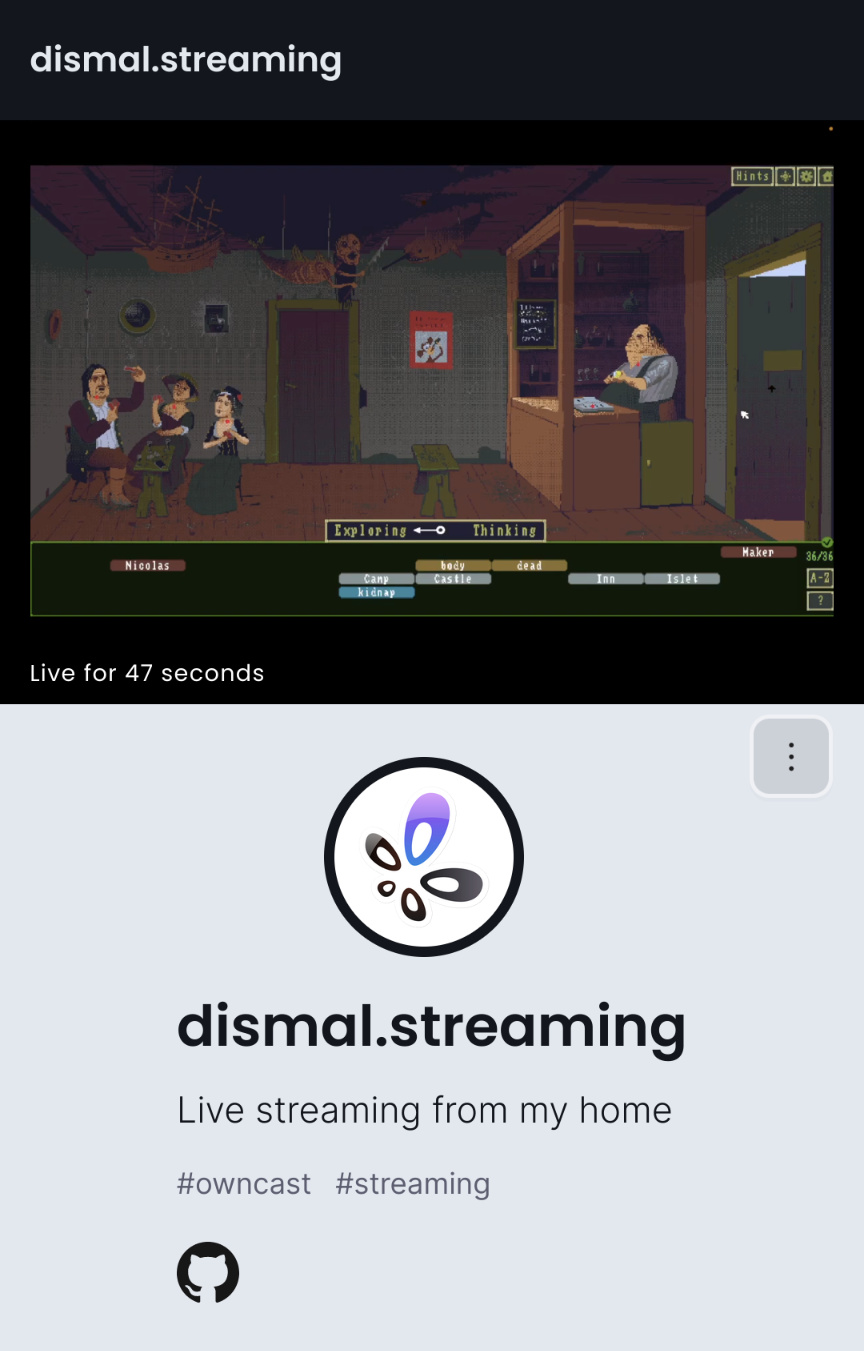

Owncast

I wanted to play a game with my girlfriend and found sharing video over chat lacking, since it didn’t include the game audio. I started experimenting with OBS, which works well for capturing and encoding on my end. For hosting the stream, I used Twitch at first, but I didn’t want to share the stream with the world. So I checked to see if there was a self-hosted alternative, and there sure is.

Owncast was trivial to install and configure and worked immediately. It’s attractive and consumes few server resources. I give a thumbs up to this tool.

Homepage

This was kind of nerdy, but around this time I started to have a whole mess of local services, and thought it would be nice to try out one of the homelab dashboard services I saw people talking about. The self-contained nature of Docker images makes experimentation quite easy, without concern you are littering your server with detritus. Heimdall was pretty enough but not much more useful than a bookmark page. Dashy was a lot more advanced, but I found it hard to work with and less attractive.

I settled on Homepage. Aside from the fact that I need to edit its YAML configuration files in Vim, it’s the right combination of easy to use and attractive. And it supports plugging in additional data. I even spent some time writing a little script (and cron job) to rotate the wallpaper.
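Here’s a minimal Python sketch of that kind of wallpaper rotation. It is an illustration, not the actual script. It assumes the one-line form `background: <url>` at the top level of Homepage’s `settings.yaml` (it would mangle a multi-line `background:` block), and it assumes the image folder is served at `/images/`. The function name, paths, and that URL prefix are all hypothetical.

```python
import random
import re
import sys
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".webp"}


def rotate_wallpaper(settings: Path, images: Path, url_prefix: str = "/images/") -> str:
    """Point the one-line top-level `background:` setting at a random image.

    Assumes (hypothetically) that `images` is served by Homepage under url_prefix.
    """
    choices = sorted(p.name for p in images.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES)
    if not choices:
        raise FileNotFoundError(f"no images in {images}")
    new_line = f"background: {url_prefix}{random.choice(choices)}"
    text = settings.read_text()
    # Replace an unindented background line; a function avoids regex escape surprises.
    text, count = re.subn(r"(?m)^background:.*$", lambda m: new_line, text)
    if count == 0:
        text = text.rstrip("\n") + "\n" + new_line + "\n"  # append if missing
    settings.write_text(text)
    return new_line


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: rotate_wallpaper.py <settings.yaml> <images dir>
    print(rotate_wallpaper(Path(sys.argv[1]), Path(sys.argv[2])))
```

Saved as a hypothetical `/volume1/scripts/rotate_wallpaper.py`, a crontab entry like `0 6 * * * python3 /volume1/scripts/rotate_wallpaper.py /volume1/docker/homepage/config/settings.yaml /volume1/docker/homepage/images` would pick a new background every morning at 6:00, assuming Homepage picks up the edited file on the next page load.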

Future: Making my own Docker container

Now I’ve got the bug. I want to understand Docker better, and I think my next effort will be to make my own image. I want to build a service that has felt missing to me, a Markdown-aware web server. (There are a few competing apps, including a Rust one, but none of them quite match my use case.) I am building it on the Mac, pushing the source to my Gitea server, and when it’s done, I will figure out how to make a Docker image out of it and host it myself. When it’s looking good, I plan to share it with the world.